Optional output parameter in stored procedure

An SQL text by Erland Sommarskog, SQL Server MVP. Most recent update Copyright applies to this text. See here for font conventions used in this article.

In this text I will discuss a number of possible solutions and point out their advantages and drawbacks. Some methods apply only when you want to access the output from a stored procedure, whereas other methods are good for the input scenario, and yet others are good for both input and output.

In the case you want to access a result set, most methods require you to rewrite the stored procedure you are calling (the callee) in one way or another, but some solutions do not.

Here is a summary of the methods that I will cover. Required version refers to the earliest version of SQL Server where the solution is available. At the end of the article, I briefly discuss the particular situation when your stored procedures are on different servers, which is a quite challenging situation. A related question is how to pass table data from a client. That topic is outside the scope of this text, but I discuss it in my article Using Table-Valued Parameters in SQL Server and .NET.

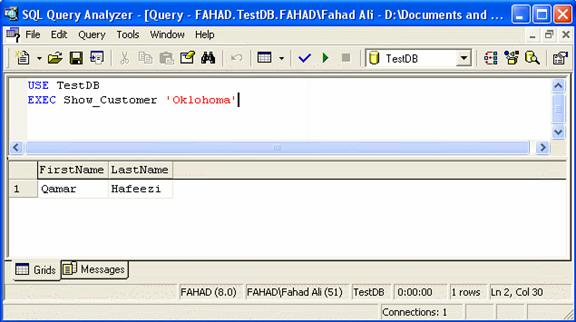

Examples in the article featuring tables such as authors, titles, sales etc. run in the old sample database pubs. You can download the script for pubs from Microsoft's web site. Some examples use purely fictive tables, and do not run in pubs.

This method can only be used when the result set is one single row. Nevertheless, this is a method that is sometimes overlooked. Say you have this simple stored procedure:
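As a minimal sketch of the single-row case, assuming the pubs database: the procedure name get_title_info and its parameters are hypothetical and not taken from the article. Instead of producing a one-row result set, the procedure hands the values back in OUTPUT parameters, which the caller can use directly.

```sql
-- Hypothetical procedure: returns a single-row result through OUTPUT parameters.
CREATE PROCEDURE dbo.get_title_info
    @title_id varchar(6),
    @title    varchar(80) OUTPUT,
    @price    money       OUTPUT
AS
SELECT @title = title, @price = price
FROM   dbo.titles
WHERE  title_id = @title_id;
GO
-- Caller: the OUTPUT keyword is needed in the call as well as in the definition.
DECLARE @title varchar(80), @price money;
EXEC dbo.get_title_info @title_id = 'BU1032',
                        @title    = @title OUTPUT,
                        @price    = @price OUTPUT;
SELECT @title AS title, @price AS price;
```

If OUTPUT is left out in the EXEC statement, the call still succeeds, but the caller's variables remain NULL.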

When all you want to do is to reuse the result set from a stored procedure, the first thing to investigate is whether it is possible to rewrite the stored procedure as a table-valued function. This is far from always possible, because SQL Server is very restrictive with what you can put into a function. But when it is possible, this is often the best choice.
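A sketch of what such a rewrite can look like, assuming the pubs database. The function name SalesByStore_fun and its column list are assumptions for illustration only. An inline table-valued function is a parameterised query that the caller can use like a table.

```sql
-- Hypothetical inline table-valued function over the pubs tables.
CREATE FUNCTION dbo.SalesByStore_fun (@storeid char(4)) RETURNS TABLE AS
RETURN (SELECT t.title, s.qty
        FROM   dbo.sales s
        JOIN   dbo.titles t ON t.title_id = s.title_id
        WHERE  s.stor_id = @storeid);
GO
-- The caller can filter, join and aggregate the result like any other table.
SELECT title, qty
FROM   dbo.SalesByStore_fun('6380')
WHERE  qty > 3;
```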

There are a couple of system functions you cannot use in a UDF, because SQL Server thinks it matters that they are side-effecting. Two examples are newid() and rand().

A typical example is getdate().

A multi-statement function has a body that can have as many statements as you like. You need to declare a return table, and you insert the data to return into that table. Here is the function above as a multi-statement function:

You use multi-statement functions in the same way as you use inline functions, but unlike inline functions, they are not expanded in place. Instead, it is as if you called a stored procedure in the middle of the query and got the data back in a table variable.

This permits you to move the code of a more complex stored procedure into a function. As you can see in the example, you can define a primary key for your return table.
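The following is a sketch of a multi-statement function with a primary key on the return table, assuming the pubs database. The name SalesByStore_multi and the aggregation are illustrative assumptions, not the article's own code.

```sql
-- Hypothetical multi-statement function. The return table is declared
-- with a primary key on a column that the body really produces.
CREATE FUNCTION dbo.SalesByStore_multi (@storeid char(4))
RETURNS @t TABLE (title varchar(80) NOT NULL PRIMARY KEY,
                  qty   int         NOT NULL) AS
BEGIN
   INSERT @t (title, qty)
      SELECT t.title, SUM(s.qty)
      FROM   dbo.sales s
      JOIN   dbo.titles t ON t.title_id = s.title_id
      WHERE  s.stor_id = @storeid
      GROUP  BY t.title;   -- one row per title, so the key cannot be violated
   RETURN;
END;
GO
SELECT title, qty FROM dbo.SalesByStore_multi('6380');
```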

I would like to point out that this is definitely best practice, for two reasons:

It goes without saying that this is only meaningful if you define a primary key on the columns you produce in the body of the UDF. Adding an IDENTITY column to the return table only to get a primary key is pointless. Compared to inline functions, multi-statement functions incur some overhead due to the return table.

More important, though, is that if you use the function in a query where you join with other tables, the optimizer will have no idea of what the function returns, and will make standard assumptions. This is far from always an issue, but the more rows the function returns, the higher the risk that the optimizer will make incorrect estimates and produce an inefficient query plan. One way to avoid this is to insert the results from the function into a temp table.

Since a temp table has statistics, this helps the optimizer to make a better plan. With interleaved execution, introduced in SQL Server 2017, the optimizer first runs the function when compiling the query, and once it knows the number of rows the function returns, it builds the rest of the plan based on this.

However, because of the lack of distribution statistics, bouncing the data over a temp table may still yield better results. Also, beware that interleaved execution only applies to SELECT statements, but not to SELECT INTO, INSERT, UPDATE, DELETE or MERGE.

Particularly the limitation with the first two can be deceitful, since a SELECT that works fine when it returns data to the client, may start to misbehave when you decide to capture the rows in a table in an INSERT or SELECT INTO statement instead, because you now get the blind estimate. User-defined functions are quite restricted in what they can do, because a UDF is not permitted to change the database state.

The most important restrictions are:

Please see the Remarks section in the topic for CREATE FUNCTION in Books Online for a complete list of restrictions.

What could be better for passing data in a database than a table?

When using a table there are no restrictions like there are when you use a table-valued function. There are two main variations of this method: sharing a temp table and using a process-keyed table. The former is more lightweight, but it comes with a maintainability problem which the second alternative addresses by using a table with a persisted schema. Both solutions come with recompilation problems that can be serious for stored procedures that are called with a high frequency, although for a process-keyed table this can be mitigated.

Using a local temp table also introduces a risk for cache littering. I will discuss these problems in more detail as we move on.

That is, the table is used for both input and output. Yet another scenario is that the caller prepares the temp table with data, and the callee first performs checks to verify that a number of business rules are not violated, and then goes on to update one or more tables.


This would be an input-only scenario. You want to reuse this result set in a second procedure that returns only titles that have sold above a certain quantity.

How would you achieve this by sharing a temp table without affecting existing clients? The solution is to move the meat of the procedure into a sub-procedure, and make the original procedure a wrapper on the sub-procedure like this:

This script is a complete repro script that creates some objects, tests them, and then drops them, to permit simple testing of variations.

We will look at more versions of these procedures later in this text. Just like in the example with the multi-statement function, I have defined a primary key for the temp table, and for exactly the same reasons. While this solution is straightforward, you may feel uneasy about the fact that the CREATE TABLE statement for the temp table appears in two places, and there is a third procedure that depends on the definition. Here is a solution which is a little more convoluted, but which to some extent alleviates the situation:

I've moved the CREATE TABLE statement from the wrapper into the core procedure, which creates the temp table only if it does not already exist.

The wrapper now consists of a single EXEC statement and passes the parameter @wantresultset as 1 to instruct the core procedure to produce the result set.
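The arrangement described above could look something like the sketch below, assuming the pubs database. The procedure bodies, column names and data types are assumptions made for illustration. Only the procedure names and the parameter @wantresultset come from the text.

```sql
-- Core procedure: fills the shared temp table, creating it only if
-- no caller has created it already.
CREATE PROCEDURE dbo.SalesByStore_core @storeid       char(4),
                                       @wantresultset bit = 0 AS
   IF object_id('tempdb..#SalesByStore') IS NULL
      CREATE TABLE #SalesByStore (title varchar(80) NOT NULL PRIMARY KEY,
                                  qty   int         NOT NULL);

   INSERT #SalesByStore (title, qty)
      SELECT t.title, SUM(s.qty)
      FROM   dbo.sales s
      JOIN   dbo.titles t ON t.title_id = s.title_id
      WHERE  s.stor_id = @storeid
      GROUP  BY t.title;

   IF @wantresultset = 1
      SELECT title, qty FROM #SalesByStore;
GO
-- Wrapper: existing clients keep calling this and still get a result set.
CREATE PROCEDURE dbo.SalesByStore @storeid char(4) AS
   EXEC dbo.SalesByStore_core @storeid, 1;
GO
-- Second caller: creates the temp table itself and reads it after the call.
CREATE PROCEDURE dbo.BigSalesByStore @storeid char(4), @qty smallint AS
   CREATE TABLE #SalesByStore (title varchar(80) NOT NULL PRIMARY KEY,
                               qty   int         NOT NULL);
   EXEC dbo.SalesByStore_core @storeid;
   SELECT title, qty FROM #SalesByStore WHERE qty >= @qty;
```

When the core procedure creates the table itself, the table is dropped when the procedure exits, which is why the wrapper must ask for the result set.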


Since this parameter has a default of 0, BigSalesByStore can be left unaffected from the previous example. Thus, there are still two CREATE TABLE statements for the temp table.

Before we move on, I would like to point out that the given example as such is not very good practice.

Not because the concept of sharing temp tables as such is bad, but as with all solutions, you need to use them in the right place. As you realise, defining a temp table and creating one extra stored procedure is too heavy artillery for this simple problem. But an example where sharing temp tables would be a good solution would have to consist of many more lines of code, which would have obscured the forest with a number of trees.

Thus, I've chosen a very simple example to highlight the technique as such. It's still a virtue, but not as big a virtue as in modern object-oriented languages.

In this simple problem, the best would of course be to add qty as a parameter to SalesByStore. And if that would not be feasible for some reason, it would still be better to create BigSalesByStore by means of copy-paste than sharing a temp table.

There are two performance issues with this technique that you need to be aware of: cache littering and recompilation.

The first issue is something I managed to sleep over myself for many years, until Alex Friedman made me aware of it. It is covered in the white paper Plan Caching in SQL Server 2008 (which, despite the title, applies to later versions as well). When a procedure refers to a temp table that it did not create itself, the session id becomes part of the cache key, so each session gets its own cache entry. That can result in a thousand cache entries for one single procedure. Imagine now that you employ this technique in some pairs of stored procedures with that usage pattern.

The number of cache entries multiplies accordingly. That is quite a lot. A second consequence of this is that, depending on the session id you get, you can get different execution plans due to parameter sniffing, which can lead to some confusion until you realise what is going on.

There is a simple way to avoid this cache littering, although it comes with its own price: add WITH RECOMPILE to the procedure definition. This prevents the plan for this procedure from being put in the cache at all, but it also means that the procedure has to be compiled every time.

This is, however, not as bad as it may sound at first. As long as the procedure is called with moderate frequency, this recompilation is not much of a concern, but in a high-frequency scenario it can cause quite an increase in CPU usage. For there to be any point in this, I think you should use the query hint OPTION (KEEPFIXED PLAN) in the queries referring to this local temp table to prevent recompilation because of changes in statistics.

For this reason, sharing temp tables is mainly useful when you have a single pair of caller and callee. Then again, if the temp table is narrow, maybe only a single column of customer IDs to process, the table is likely to be very stable.

There are some alternatives to overcome the maintenance problem. One is to use a process-keyed table, which we will look into in the next section. I have also received some interesting ideas from readers of this article. One solution comes from Richard St-Aubin.

The callers create the temp table with a single dummy column, and then call a stored procedure that uses ALTER TABLE to add the real columns. It would look something like this:

This method can definitely be worth exploring, but beware that now you will get the schema-induced recompile that I discussed in the previous section in the outer procedures as well. Also, beware that this trick prevents SQL Server from caching the temp-table definition.

This is one more thing that could have a significant impact in case of high-frequency calls.

Another reader, Wayne, creates a table type that holds the definition of the temp table.

You can only use table types for declaring table variables and table-valued parameters. But Wayne has a cure for this:

From this point you work with #mytemp; the sole purpose of @dummy is to be able to create #mytemp from a known and shared definition. If you are unacquainted with table types, we will take a closer look at them in the section on table-valued parameters.
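A sketch of this table-type trick follows. The type name mytemp_type and its columns are hypothetical. Only the names #mytemp and @dummy appear in the text.

```sql
-- The shared definition lives in one place: a table type (hypothetical name).
CREATE TYPE dbo.mytemp_type AS TABLE (custid int           NOT NULL,
                                      amount decimal(10,2) NOT NULL);
GO
-- Any procedure that needs the temp table derives it from the type.
-- @dummy is empty, so SELECT INTO copies only the column definitions.
DECLARE @dummy dbo.mytemp_type;
SELECT * INTO #mytemp FROM @dummy;

INSERT #mytemp (custid, amount) VALUES (1, 99.50);
SELECT custid, amount FROM #mytemp;
```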

A limitation with this method is that you can only centralise column definitions this way, but not constraints as they are not copied with SELECT INTO. You may think that constraints are odd things that you rarely put in a temp table, but I have found that it is often fruitful to add constraints to my temp tables as assertions for my assumptions about the data.

This applies not the least to temp tables that are shared between stored procedures. Also, defining primary keys for your temp tables can avoid performance issues when you start to join them.

Let me end this section by pointing out that sharing temp tables opens for some flexibility. The callee only cares about the columns it reads or writes. This permits a caller to add extra columns for its own usage when it creates the temp table.

Thus, two callers to the same inner procedure could have different definitions of the temp table, as long as the columns accessed by the inner procedure are defined consistently.

A more advanced way to tackle the maintenance problem is to use a pre-processor and put the definition of the temp table in an include-file. If you have a C compiler around, you can use the C pre-processor. My AbaPerls includes a pre-processor, Preppis, which we use in the system I spend most of my time with.

SQL Server Data Tools, SSDT, is a very versatile environment that gives you many benefits. One benefit is that if you write a stored procedure like:

SSDT will tell you up front about the misspelling of the temp table name, before you try to create the procedure.

It is certainly very helpful to have the typo trapped early. They are only warnings, so you can proceed, but it only takes a handful of such procedures to clutter up the Error List window, so there is a risk that you miss other and more important issues. There is a way to suppress the warning: But this means that you lose the checking of all table names in the procedure; there is no means to suppress the warning only for the shared temp table. All in all, if you are using SSDT, you will find this to be an extra barrier against sharing temp tables.

This method evades the cache-littering problem and the maintenance problem by using a permanent table instead. There is still a recompilation problem, though, but of a different nature.

A process-keyed table is simply a permanent table that serves as a temp table. To permit processes to use the table simultaneously, the table has an extra column to identify the process. The simplest way to do this is the global variable @@spid (@@spid is the process id in SQL Server).

In fact, this is so common, that these tables are often referred to as spid-keyed tables. Here is an outline; I will give you a more complete example later.

While it's common to use @@spid as the process key, there are two problems with this:

One alternative for the process key is to use a GUID (data type uniqueidentifier).

If you create the process key in SQL Server, you can use the function newid(). You can rely on newid() to return a unique value, which is why it addresses the first point.

You may have heard that you should not have guids in your clustered index, but that applies when the guid is the primary key alone, since this can cause fragmentation and a lot of page splits.

In a process-keyed table, you will typically have many rows for the same guid, so it is a different situation. And more to the point:

Another alternative is to generate the process key from a sequence object, which you create with the statement CREATE SEQUENCE, for instance:

You need to use the variable, or else the sequence will generate a different value on each row. The typical use for sequences is to generate surrogate keys just like IDENTITY.
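To illustrate the sequence variant, here is a minimal sketch assuming the pubs database and SQL Server 2012 or later. The sequence name, the table stores_aid and its columns are hypothetical. The key point is that the process key is fetched once into a variable and then reused for every row.

```sql
-- Hypothetical sequence and process-keyed table.
CREATE SEQUENCE dbo.process_key_seq AS int START WITH 1 INCREMENT BY 1;
CREATE TABLE dbo.stores_aid
   (process_key int     NOT NULL,
    storeid     char(4) NOT NULL,
    totalqty    int     NULL,
    CONSTRAINT pk_stores_aid PRIMARY KEY (process_key, storeid));
GO
-- Fetch the key once. NEXT VALUE FOR directly in the SELECT list would
-- produce a new value for each row.
DECLARE @mykey int = NEXT VALUE FOR dbo.process_key_seq;

INSERT dbo.stores_aid (process_key, storeid)
   SELECT @mykey, stor_id FROM dbo.stores;

-- ... call the procedure that works on the rows for @mykey here ...

-- Clean up the rows for this process when done.
DELETE dbo.stores_aid WHERE process_key = @mykey;
```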

Let's say that there are several places in the application where you need to compute the total number of sold books for one or more stores. In this example, the procedure is nothing more than a simple UPDATE statement that computes the total number of books sold per store. A real-life problem could have a complex computation that runs over several hundred lines of code. There is also an example procedure TotalStoreQty which returns the total sales for a certain state.

In the environment where I do my daily chores, we have quite a few process-keyed tables, and we have adopted the convention that all these tables end in -aid. This way, when you read some code, you know directly that this is not a "real" table with persistent data.

Nevertheless, some of our aid tables are very important in our system as they are used by core functions. There is a second point with this name convention. It cannot be denied that a drawback with process-keyed tables is that sloppy programmers could forget to delete data when they are done. Not only does this waste space, it can also result in incorrect row-count estimates leading to poor query plans.

For this reason, it is a good idea to clean up these tables on a regular basis. For instance, in our night job we have a procedure that runs the query below and then executes the generated statements:
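A query of that kind could be sketched as below. This is an assumption about its shape, not the query from the night job: it relies on the naming convention that process-keyed tables end in aid, and it assumes that TRUNCATE TABLE is an acceptable way to empty them.

```sql
-- Generates one TRUNCATE TABLE statement per table whose name ends in "aid".
-- The output is meant to be reviewed or executed by the calling job.
SELECT 'TRUNCATE TABLE ' + quotename(schema_name(schema_id)) + '.' +
       quotename(name) + ';' AS stmt
FROM   sys.tables
WHERE  name LIKE '%aid';
```

Note that TRUNCATE TABLE fails for tables referenced by foreign keys, so a DELETE may be needed for those.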

As we saw, when sharing temp tables, this causes recompilations in the inner procedure, because the temp table is a new table every time. While this issue does not exist with process-keyed tables, you can still get a fair share of recompilation because of auto-statistics, a feature which is enabled in SQL Server by default. For full details on recompilation, see this white paper by Eric Hanson and Yavor Angelov. Since a process-keyed table is typically empty when it is not in use, auto-statistics sets in often.

Sometimes this can be a good thing, as the statistics may help the optimizer to find a better plan. But the recompilation may also cause an unacceptable performance overhead. There are three ways to deal with this:

Compared to sharing temp tables, one disadvantage with process-keyed tables is that you tend to put them in the same database as your other tables. This has two ramifications:

You can address the second point by putting your process-keyed tables in a separate database with simple recovery.

Both points can be addressed by using a memory-optimised table or a global temp table, discussed in the next two sections. A memory-optimised table is entirely in memory. By default, updates are also logged and written to files, so that data in the table survives a restart of the server.

However, you can define a memory-optimised table to have durability only for the schema. That is, all data in the table is lost on a server restart. This makes them a perfect fit for process-keyed tables, as these tables then have hardly any logging at all.

Using a global temp table is another solution to reduce logging for your process-keyed table, which you would mainly choose if you are on an edition or version of SQL Server where memory-optimised tables are not available to you, or if you bump into any of the restrictions for such tables. A global temp table has a name with two leading hash marks (##). Unlike a regular temp table, a global temp table is visible to all processes.

When the process that created the table goes away, so does the table (with some delay if another process is running a query against the table at that precise moment).

Under that condition, it is difficult to use such a table as a process-keyed table. However, there is a special case that SQL Server MVP Itzik Ben-Gan made me aware of: if you create the global temp table in a start-up procedure, the table stays around for as long as the server is running. This permits you to use the table as a process-keyed table, because you can rely on the table to always be there. Since the table is in tempdb, you get less logging than for a table in your main database.

It cannot be denied that there are some problems with this solution. What if you need to change the definition of the global temp table in a way that cannot be handled with ALTER TABLE? Restarting the server to get the new definition in place may not be acceptable.

Or what if you have different versions of your database on a test server, and the different versions require different schemas for the process-keyed table? I would recommend that you refer to your process-keyed table through a synonym, so that in a development database or on a common test server it can point to a database-local table, and only on production or acceptance-test servers would it point to the global temp table.

This also permits you to retarget the synonym if the schema has to be changed without a server restart.

While process-keyed tables are not without issues when it comes to performance, and they are certainly a bit heavy-handed for the simpler cases, I still see this as the best overall solution that I present in this article. It does not come with a ton of restrictions like table-valued functions, and it is robust, meaning that code will not break because of seemingly innocent changes, unlike some of the other methods we will look at later.

But that does not mean that using a process-keyed table is always the way to go. For instance, if you only need output-only, and your procedure can be written as a table-valued function, that should be your choice.

Table-valued parameters (TVPs) permit you to pass a table variable as a parameter to a stored procedure. When you create your procedure, you don't put the table definition directly in the parameter list. Instead, you first have to create a table type and use that in the procedure definition.

At first glance, it may seem like an extra step of work, but when you think of it, it makes very much sense: the table has to be declared in both the caller and the callee. So why not have the definition in one place? One thing to note is that a table-valued parameter always has an implicit default value of an empty table.

Table-valued parameters certainly seem like the definite solution, don't they? Unfortunately, TVPs have a very limited usage for the problem I'm discussing in this article. If you look closely at the procedure definition, you find the keyword READONLY. And that is not an optional keyword, but it is compulsory for TVPs. So if you want to use TVPs to pass data between stored procedures, they are usable solely for input-only scenarios.
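As an illustration of the input-only pattern, here is a minimal sketch. The type custid_list, the procedure process_customers and the column names are hypothetical. Note the mandatory READONLY keyword in the parameter list.

```sql
-- Hypothetical table type shared by caller and callee.
CREATE TYPE dbo.custid_list AS TABLE (custid int NOT NULL PRIMARY KEY);
GO
-- The callee can read the parameter but cannot modify it.
CREATE PROCEDURE dbo.process_customers @custids dbo.custid_list READONLY AS
   SELECT c.custid
   FROM   @custids c
   ORDER  BY c.custid;
GO
-- The caller fills a table variable of the type and passes it along.
DECLARE @list dbo.custid_list;
INSERT @list (custid) VALUES (1), (2), (3);
EXEC dbo.process_customers @list;
```

An INSERT, UPDATE or DELETE against @custids inside the procedure would be rejected when the procedure is created.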

I don't know about you, but in almost all situations where I share a temp table or use a process-keyed table, it's for input-output or output-only. So when I learnt that TVPs were read-only, I was disappointed.

Making them read-write when called from a client is likely to be a bigger challenge. Ten years later, the Connect item is still active, but table-valued parameters are still readonly.

While outside the scope of this article, table-valued parameters are still a welcome addition to SQL Server, since they make it a lot easier to pass a set of data from client to server, and in this context the READONLY restriction is not a big deal.

I give an introduction to how to use TVPs from ADO .NET in my article Using Table-Valued Parameters in SQL Server and .NET.

INSERT-EXEC is a method that has been in the product for a long time. It's a method that is seemingly very appealing, because it's very simple to use and understand.

Also, it permits you to use the result of a stored procedure without any changes to it. Above we had the example with the procedure SalesByStore. Here is how we can implement BigSalesByStore with INSERT-EXEC:

In this example, I receive the data in a temp table, but it could also be a permanent table or a table variable. It cannot be denied that this solution is simpler than the solution with sharing a temp table.
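A sketch of the INSERT-EXEC variant, under the assumption that SalesByStore takes a store id and returns a result set with the two columns title and qty. The column names and data types here are illustrative.

```sql
CREATE PROCEDURE dbo.BigSalesByStore @storeid char(4), @qty smallint AS
   -- The column list must match the result set of the callee exactly.
   CREATE TABLE #SalesByStore (title varchar(80) NOT NULL,
                               qty   int         NOT NULL);

   INSERT #SalesByStore (title, qty)
      EXEC dbo.SalesByStore @storeid;

   SELECT title, qty
   FROM   #SalesByStore
   WHERE  qty >= @qty;
```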

So why then did I first present a more complex solution? Because when we peel off the surface, we find that this method has a couple of issues that are quite problematic.

This is a restriction in SQL Server and there is not much you can do about it, other than to save the use of INSERT-EXEC until you really need it. That is, when rewriting the callee is out of the question, for instance because it is a system stored procedure.

A developer merrily adds the column to the result set. Unfortunately, any attempt to use the code that calls BigSalesByStore now ends in tears:

The result set from the called procedure must match the column list in the INSERT statement exactly.

The procedure may produce multiple result sets, and that's alright as long as all of them match the INSERT statement. From my perspective, having spent a lot of my professional life with systems development, this is completely unacceptable. Yes, there are many ways to break code in SQL Server.


For instance, a developer could add a new mandatory parameter to SalesByStore and that would also break BigSalesByStore.

But most developers are aware of the risks with such a change to an API and therefore add a default value for the new parameter. Likewise, most developers understand that removing a column from a result set could break client code that expects that column, and they would not do this without checking all code that uses the procedure.

But adding a column to a result set seems so innocent. And what is really bad:

Provided that you can change the procedure you are calling, there are two ways to alleviate the problem. One is simply to add a comment in the code of the callee, so that the next developer that comes around is made aware of the dependency and hopefully changes your procedure as well.

Here is an example:

This is not a matter of sloppiness; it is essential here. If someone wants to extend the result set of SalesByStore, the developer has to change the table type, and BigSalesByStore will survive, even if the developer does not know about its existence.

You could argue that this is almost like an output TVP, but don't forget the other problems with INSERT-EXEC, of which there are two more to cover.

So that the statement can be rolled back in case of an error.

That includes any procedure called through INSERT-EXEC. Is this bad or not? In many cases, this is not much of an issue. But there are a couple of situations where this can cause problems:

In my articles on Error and Transaction Handling in SQL Server, I suggest that you should always have an error handler. The idea is that even if you do not start a transaction in the procedure, you should always include a ROLLBACK, because if you were not able to fulfil your contract, the transaction is no longer valid.
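An error handler in that spirit might be sketched as follows. This is an illustration, not the exact handler from the error-handling articles: the procedure name is hypothetical, the UPDATE assumes the pubs database, and THROW assumes SQL Server 2012 or later.

```sql
CREATE PROCEDURE dbo.some_procedure AS
BEGIN TRY
   -- The actual work of the procedure goes here.
   UPDATE dbo.titles SET price = price WHERE 1 = 0;
END TRY
BEGIN CATCH
   -- Roll back even if this procedure did not start the transaction itself.
   IF @@trancount > 0 ROLLBACK TRANSACTION;
   -- Re-raise the original error to the caller.
   THROW;
END CATCH
```

It is this unconditional ROLLBACK that conflicts with a caller that invokes the procedure through INSERT-EXEC.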

Unfortunately, this does not work well with INSERT-EXEC. If the called procedure executes a ROLLBACK statement, this happens:

The execution of the stored procedure is aborted. If there is no CATCH handler anywhere, the entire batch is aborted, and the transaction is rolled back. If the INSERT-EXEC is inside TRY-CATCH, that CATCH handler will fire, but the transaction is doomed, that is, you must roll it back. The net effect is that the rollback is achieved as requested, but the original error message that triggered the rollback is lost.

That may seem like a small thing, but it makes troubleshooting much more difficult, because when you see this error, all you know is that something went wrong, but you don't know what. Can you find out at run-time whether you are in INSERT-EXEC? Hm, yes, but that is a serious kludge. See this section in Part Two of Error and Transaction Handling in SQL Server for how to do it, if you absolutely need to.

You can also use INSERT-EXEC with dynamic SQL:

Presumably, you have created the statement in @sql within your stored procedure, so it is unlikely that a change in the result set will go unnoticed. So from this perspective, INSERT-EXEC is fine.
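A minimal sketch of INSERT-EXEC with dynamic SQL, assuming the pubs database. The query text, the temp table and the parameter are illustrative assumptions.

```sql
DECLARE @sql nvarchar(MAX) =
   N'SELECT title_id, qty FROM dbo.sales WHERE stor_id = @storeid';

CREATE TABLE #result (title_id varchar(6) NOT NULL,
                      qty      smallint   NOT NULL);

-- The result set of the dynamic statement is captured in the temp table.
INSERT #result (title_id, qty)
   EXEC sp_executesql @sql, N'@storeid char(4)', @storeid = '6380';

SELECT title_id, qty FROM #result;
```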

But the restriction that INSERT-EXEC can't nest remains, so if you use it, no one can call you with INSERT-EXEC. For this reason, in many cases it is better to put the INSERT statement inside the dynamic SQL. There is also a performance aspect that SQL Server MVP Adam Machanic has detailed in a blog post. The short summary is that with INSERT-EXEC, data does not go directly to the target table but bounces over a "parameter table", which incurs some overhead.

Then again, if your target table is a temp table, and you put the INSERT inside the dynamic SQL, you may face a performance issue because of recompilation. Occasionally, I see people who use INSERT-EXEC to get back scalar values from their dynamic SQL statement, which they typically invoke with EXEC.

Dynamic SQL is a complex topic, and if you are not acquainted with it, I recommend that you read my article The Curse and Blessings of Dynamic SQL.

INSERT-EXEC is simple to use, and if all you want to do is to grab a big result set from a stored procedure for further analysis ad hoc, it's alright.

But you should be very restrictive about using it in application code. Only use it when rewriting the procedure you are calling is completely out of the question. That is, the procedure is not part of your application: And in this case, you should make it a routine to always test your code before you take a new version of the other product into use.

If INSERT-EXEC shines in its simplicity, using the CLR is complex and bulky.

It is not likely to be your first choice, and nor should it be. However, if you are in the situation that you cannot change the callee, and nor is it possible for you to use INSERT-EXEC because of any of its limitations, the CLR can be your last resort. As a recap, here are the main situations where INSERT-EXEC fails you, and you may want to turn to the CLR:

The CLR has one more advantage over INSERT-EXEC: if a column is added to the result set of the procedure, your CLR procedure will not break. The idea as such is simple: you write a stored procedure in a .NET language that runs the callee and captures the result set(s) into a DataSet object. Then you write the data from the DataSet back to the table where you want the data. While simple, you need to write some code. Let's have a look at an example. Say that you want to gather this output for all databases on the server.

You cannot use INSERT-EXEC due to the multiple result sets. To address this issue, I wrote a stored procedure in C# that you find in the file helpdb. The procedure uses the Fill method of a DataAdapter to get the data into a DataSet. It then inserts the data in the second DataTable in the DataSet to helpdb. This is done in a single INSERT statement by passing the DataTable directly to a table-valued parameter.

The database name is passed in a separate parameter. Undoubtedly, this solution requires a bit of work. You need to write more code than with most other methods, and you get an assembly that you must somehow deploy.

If you are already using the CLR in your database, you probably already have routines for dealing with assemblies. But if you are not, that first assembly you add to the database is quite a step to take. A further complication is that the CLR in SQL Server is disabled by default. To enable it, you or the DBA need to run:
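The standard way to enable the CLR is through the server configuration option clr enabled, as in this short sketch. Changing the option requires server-level permission (ALTER SETTINGS).

```sql
-- Enables execution of user CLR code on the instance.
EXEC sp_configure 'clr enabled', 1;
RECONFIGURE;
```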

Another issue is that this solution goes against best practices for using the CLR in SQL Server. Another violation of best practice is the use of the DataAdapter, DataTable and DataSet classes. This is something to be avoided in CLR stored procedures, because it means that you have data in memory in SQL Server outside the buffer pool.

Of course, a few megabytes is not an issue, but if you were to read several gigabytes of data into a DataSet, this could have quite nasty effects on the stability of the SQL Server process.

The alternative is to use a plain ExecuteReader and insert the rows as they come, possibly buffering them in small sets of say rows to improve performance.

This is certainly a viable solution, but it makes deployment even more difficult. So for practical purposes, you would only go this road if you are anxious that you will read more data than is defensible for a DataSet.

Just like INSERT-EXEC, this is a method where you can use the called stored procedure as-is.

It can be very useful, not the least if you want to join multiple tables on the remote server and want to be sure that the join is evaluated remotely. Instead of accessing a remote server, you can make a loopback connection to your own server, so you can say things like:

If you want to create a table from the output of a stored procedure with SELECT INTO to save typing, this is the only method in the article that fits the bill.

So far, OPENQUERY looks very simple, but as this chapter moves on you will learn that OPENQUERY can be very difficult to use. Moreover, it is not aimed at improving performance. It may save you from rewriting your stored procedure, but most likely you will have to put in more work overall — and in the end you get a poorer solution.

While I'm not enthusiastic over INSERT-EXEC, it is still a far better choice than OPENQUERY.

To use OPENQUERY against your own server, you need a linked server that points back to it, which you define with sp_addlinkedserver. To create a linked server, you must have the permission ALTER ANY LINKED SERVER, or be a member of any of the fixed server roles sysadmin or setupadmin. Instead of SQLOLEDB, you can specify SQLNCLI, SQLNCLI10 or SQLNCLI11 depending on your version of SQL Server.
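A sketch of such a definition follows. The name LOCALSERVER is an arbitrary choice, and the provider name is the one mentioned in the text; adjust both to your environment.

```sql
-- Define a linked server that loops back to the current instance.
DECLARE @thisserver sysname = @@servername;

EXEC sp_addlinkedserver @server     = 'LOCALSERVER',
                        @srvproduct = '',
                        @provider   = 'SQLOLEDB',
                        @datasrc    = @thisserver;
```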

SQL Server seems to use the most recent version of the provider anyway.

It's important to understand that OPENQUERY opens a new connection to SQL Server. This has some implications. One requirement is that the settings ANSI_NULLS and ANSI_WARNINGS must be on. Thankfully, these settings are also on by default in most contexts. If they are off, you get an error message which ends: This ensures consistent query semantics. Enable these options and then reissue your query.

The second parameter to OPENQUERY is the query to run on the remote server, and you may expect to be able to use a variable here, but you cannot.

The query string must be a constant, since SQL Server needs to be able to determine the shape of the result set at compile time.

This means that as soon as your query has a parameter value, you need to use dynamic SQL, and this applies to an implementation of BigSalesByStore with OPENQUERY as well. What initially seemed simple to use is no longer so simple. What I did not say above is that there are two reasons why we need dynamic SQL here. Beside the parameter @storeid, there is also the database name. Since OPENQUERY opens a loopback connection, the EXEC statement must include the database name. Yes, you could hardcode the name, but sooner or later that will bite you, if nothing else the day you want to restore a copy of your database on the same server for test purposes.

From this follows that in practice, there are not many situations in application code where you can use OPENQUERY without using dynamic SQL.

The code certainly requires some explanation.

The function quotestring is a helper, taken from my article on dynamic SQL. The problem with writing dynamic SQL which involves OPENQUERY is that you get at least three levels of nested strings, and if you try to do it all at once, you will find yourself writing code with up to eight consecutive single quotes that neither you nor anyone else can read.

Therefore, it is essential to approach the problem in a structured way like I do above. I first form the query on the remote server, and I use quotestring to embed the store id.

Then I form the SQL string to execute locally, and again I use quotestring to embed the remote query. I could also have embedded @qty in the string, but I prefer to adhere to best practices and pass it as a parameter to the dynamic SQL string.
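The structured approach described above can be sketched as follows. This is a reconstruction for illustration and not necessarily the exact code the text refers to: it assumes the linked server LOCALSERVER, the SalesByStore procedure from earlier in the article, and a quotestring helper that doubles embedded single quotes. The @debug parameter only prints the generated statement.

```sql
-- Helper: wrap a string in single quotes, doubling any quotes inside it.
CREATE FUNCTION dbo.quotestring(@str nvarchar(MAX)) RETURNS nvarchar(MAX) AS
BEGIN
   DECLARE @sq nchar(1) = '''';
   RETURN (@sq + replace(@str, @sq, @sq + @sq) + @sq);
END
go
CREATE PROCEDURE dbo.BigSalesByStore @storeid varchar(30),
                                     @qty     smallint,
                                     @debug   bit = 0 AS
   DECLARE @remotesql nvarchar(MAX),
           @localsql  nvarchar(MAX);

   -- Level 1: the statement to run over the loopback connection.
   SELECT @remotesql = 'EXEC ' + quotename(db_name()) + '.dbo.SalesByStore ' +
                       dbo.quotestring(@storeid);

   -- Level 2: the local statement, with the remote query as a string literal.
   SELECT @localsql = 'SELECT * FROM OPENQUERY(LOCALSERVER, ' +
                      dbo.quotestring(@remotesql) + ') WHERE qty >= @qty';

   IF @debug = 1 PRINT @localsql;

   -- @qty is passed as a real parameter rather than concatenated.
   EXEC sp_executesql @localsql, N'@qty smallint', @qty;
```

Building one level of nesting at a time means that no line has to contain more than a couple of consecutive quotes.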

As always when I use dynamic SQL, I include a debug parameter, so that I can inspect the statement I've generated.

To be able to compile the query, SQL Server needs to know the shape of the result set returned from the procedure. Beware that this discovery process will not always succeed, and the classic example is when temp tables are involved. This issue does not apply to table variables, only temp tables.

There is a remedy for this, though: with the clause WITH RESULT SETS you specify the shape of the result set yourself, and the query returns the data as desired. Note that SQL Server will validate that the result set returned from the procedure actually aligns with what you say in WITH RESULT SETS and raise an error if not. Also observe the syntax: there are two pairs of parentheses. Obviously, this requires that you know what the result set looks like. And even if you don't care about the umpteen other columns returned by mysp, you still have to list them all.
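As an illustration of the remedy, here is a sketch with a hypothetical procedure temp_temp, assumed to be created in tempdb, that returns data through a temp table. The linked server LOCALSERVER is likewise an assumption.

```sql
CREATE PROCEDURE dbo.temp_temp AS
   SET NOCOUNT ON;
   CREATE TABLE #temp (a int NOT NULL);
   INSERT #temp (a) VALUES (12);
   SELECT a FROM #temp;
go
-- The outer parentheses enclose the list of result sets, the inner pair
-- encloses the column list of the first (and here only) result set.
SELECT *
FROM   OPENQUERY(LOCALSERVER,
                 'EXEC tempdb.dbo.temp_temp WITH RESULT SETS ((a int))');
```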

And if someone adds one more column to the result set, your query will break, exactly the same problem as we saw with INSERT-EXEC.

Again, you can use WITH RESULT SETS to save the show. If you have the powers to change the procedure you are calling, it is better to attach the clause directly to the EXEC statement that executes the dynamic SQL.

During compilation SQL Server would run the SQL text fed to OPENQUERY preceded by the command SET FMTONLY ON. When FMTONLY is ON, SQL Server does not execute any data-retrieving statements, but only sifts through the statements to return metadata about the result sets. This is not a very robust mechanism, and FMTONLY can be a source of confusion in more than one way, and you will see some hilarious code later on. When FMTONLY is ON, the temp table is not created.

However, there is a trick you can do: add SET FMTONLY OFF to the command string that you pass to OPENQUERY. At first glance, this may seem like a nicer solution than having to specify the exact shape of the result set using WITH RESULT SETS, but that is absolutely not the case, for two reasons, one of them being that the trick is not future-proof.

For these reasons, I strongly recommend you to stay away from this trick! The best solution if you want to use the data from your stored procedure is to use any other of the methods described in this article, including INSERT-EXEC.

What happens here is that when FMTONLY is ON, variable assignments are still carried out.

When it comes to IF statements, the conditions are not evaluated, but both the IF and ELSE branches are "executed" (that is, sifted through). Thus, only when FMTONLY is ON, the flag @fmtonlyon will be set.

We turn off FMTONLY before the creation of the temp table, to prevent compilation from failing, but then we restore the setting immediately after, thanks to the @fmtonlyon flag. Again, keep in mind that this is not future-proof. The day you upgrade to a newer version of SQL Server, your loopback query will fail, and you will need to change it to add WITH RESULT SETS. And, of course, since this trick is only possible when you are in the position that you can change the procedure, you are better off anyway by sharing a temp table or using a process-keyed table.
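For readers who want to see what the flag trick looks like, here is a sketch reconstructed from the description above. It is shown to explain the mechanism, not as a recommendation, and the procedure and table names are hypothetical.

```sql
CREATE PROCEDURE dbo.temp_temp AS
   SET NOCOUNT ON;
   DECLARE @fmtonlyon bit;
   SELECT @fmtonlyon = 0;

   -- With FMTONLY ON the condition is not evaluated, but the assignment
   -- in the branch is still carried out, so the flag becomes 1.
   IF 1 = 0 SELECT @fmtonlyon = 1;

   -- Make sure that the temp table really gets created.
   SET FMTONLY OFF;
   CREATE TABLE #temp (a int NOT NULL);

   -- Restore the original setting, so that the rest is only sifted through.
   IF @fmtonlyon = 1 SET FMTONLY ON;

   INSERT #temp (a) VALUES (12);
   SELECT a FROM #temp;
```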

If the stored procedure you call returns its result set through dynamic SQL, this may work if the SQL string is formed without reading data from any table, but if not, the query will fail with an error message. Again, the workaround with SET FMTONLY OFF works, but it is still not future-proof.

Finally, an amusement with FMTONLY you may run into is that since variable assignments are carried out but conditions for IF are ignored, you may get seemingly inexplicable errors.

For instance, if you have a procedure that can call itself, the attempt to call it through OPENQUERY is likely to end with exceeding the maximum nest level of 32 even if the procedure has perfectly correct recursion handling.

Cannot process the object "EXEC tempdb.

The reason for this message is that the first "result set" is the rows affected message generated by the INSERT statement, and this message lures OPENQUERY to think that there were no columns in the result set. Adding SET NOCOUNT ON to the procedure resolves this issue.

You could also add SET NOCOUNT ON to the command string you pass to OPENQUERY. And unlike the tricks with SET FMTONLY, this is a perfectly valid thing to do with no menacing side effects.

Another thing that can give some surprises is implicit transactions. With the setting IMPLICIT_TRANSACTIONS in force, SQL Server starts a transaction when an INSERT, UPDATE or DELETE statement is executed. (This also applies to a few more statements, see Books Online for details.) For instance, take the script above. Once SET NOCOUNT ON is in force, the call to OPENQUERY succeeds, but when we SELECT directly from nisse after the call to OPENQUERY, the table is empty. This is because the implicit transaction was rolled back since it was never committed.

As you have seen, at first OPENQUERY seemed very simple to use, but the stakes rise steeply. If you are still considering using OPENQUERY after having read this section, I can only wish you good luck and I hope that you really understand what you are doing. OPENQUERY was not intended for accessing the local server, and you should think twice before you use it that way.

XML is a solution that aims at the same spot as sharing a temp table and process-keyed tables. That is, the realm of general solutions without restrictions, at the price of a little more work. Here we use FOR XML RAW to generate an XML document that we save to the output parameter @xmldata. FOR XML has three more options beside RAW: AUTO, EXPLICIT and PATH, but for our purposes here, RAW is the simplest to use. You don't have to specify a name for the elements; the default in this case will be row, but I would suggest that using a name is good for clarity.

The keyword TYPE ensures that the return type of the SELECT query is the xml data type; without TYPE the type would be nvarchar(MAX). TYPE is not needed here, since there will be an implicit conversion to xml anyway, but it can be considered good practice to include it. The Connect item is still open, but I have not re-evaluated the repro in the Connect item to verify that the issue still exists.
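To illustrate FOR XML RAW with a named element and the TYPE keyword, here is a sketch of a procedure that returns its result set as an XML output parameter. It runs against the pubs tables; the procedure name SalesByStore_core and the element name are assumptions made for the example.

```sql
CREATE PROCEDURE dbo.SalesByStore_core @storeid varchar(30),
                                       @xmldata xml OUTPUT AS
   -- Each row becomes a <SalesByStore> element with the columns as attributes.
   SET @xmldata = (
      SELECT t.title, s.qty
      FROM   dbo.sales s
      JOIN   dbo.titles t ON t.title_id = s.title_id
      WHERE  s.stor_id = @storeid
      FOR XML RAW('SalesByStore'), TYPE);
```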

Since SalesByStore should work like it did originally, it has to convert the data back to tabular format, a process known as shredding. To shred the document, we use two of the xml type methods.

The first is nodes, which shreds the document into fragments of a single element, returned as a one-column table. The part T(c) that follows the call to nodes defines an alias for the one-column table as well as an alias for the column. To get the values out of the fragments, we use another xml type method, value. The value method takes two arguments, of which the first addresses the value we want to extract, and the second specifies the data type.

The first parameter is a fairly complex story, but as long as you follow the example above, you don't really need to know any more. Just keep in mind that you must put an @ before the attribute names, else you would be addressing an element. In the XML section of my article Arrays and Lists in SQL Server (The Long Version), I have some more information about nodes and value.
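The following self-contained sketch shows nodes and value at work. To keep it runnable without any tables, the XML document is a literal with made-up titles; in a real caller it would come from an output parameter. The second query filters through a CTE, so that the call to value does not have to be repeated in the WHERE clause.

```sql
DECLARE @xmldata xml =
   N'<SalesByStore title="First book" qty="25"/>
     <SalesByStore title="Second book" qty="3"/>';

-- nodes gives one row per <SalesByStore> element, value extracts attributes.
SELECT T.c.value('@title', 'varchar(80)') AS title,
       T.c.value('@qty',   'smallint')    AS qty
FROM   @xmldata.nodes('SalesByStore') AS T(c);

-- Same shredding, but filtered on qty through a CTE.
WITH SalesByStore AS (
   SELECT T.c.value('@title', 'varchar(80)') AS title,
          T.c.value('@qty',   'smallint')    AS qty
   FROM   @xmldata.nodes('SalesByStore') AS T(c)
)
SELECT title, qty
FROM   SalesByStore
WHERE  qty >= 10;
```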

To make the example complete, the XML version of BigSalesByStore works in the same way. To avoid having to repeat the call to value in the WHERE clause, I use a CTE (Common Table Expression).

In this example the XML document is output-only, but it's easy to see that the same method can be used for input-only scenarios. The caller builds the XML document and the callee shreds it back to a table. What about input-output scenarios like the procedure ComputeTotalStoreQty?

One possibility is that the callee shreds the data into a temp table, performs its operation, and then converts the data back to XML. A second alternative is that the callee modifies the XML directly using the xml type method modify. I will spare you from an example of this, however, as it is unlikely that you would try it, unless you already are proficient in XQuery.

A better alternative may be to mix methods.

Say that we want to return the name of all authors, as well as all the titles they have written. With temp tables or process-keyed tables, the natural solution would be to use two tables (or actually three, since in pubs there is a many-to-many relationship between titles and authors, which I overlook here).

But since XML is hierarchical, it would be more natural to put everything in a single XML document, which a single query can produce. Rather than a regular join query, I use a subquery for the titles, because I only want one node per author with all titles. With a join, I get one author node for each title, so that authors with many books appear in multiple nodes. The subquery uses FOR XML to create a nested XML document, and this time the TYPE option is mandatory, since without it the nested XML data would be included as a plain string.
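A sketch of such a query against pubs, together with one way to shred the document back into rows, could look like this. The element names authors and titles are choices made for the example.

```sql
DECLARE @x xml;

-- One <authors> node per author, with nested <titles> nodes from the subquery.
SELECT @x = (
   SELECT a.au_id, a.au_lname, a.au_fname,
          (SELECT t.title
           FROM   dbo.titleauthor ta
           JOIN   dbo.titles t ON t.title_id = ta.title_id
           WHERE  ta.au_id = a.au_id
           FOR XML RAW('titles'), TYPE)
   FROM   dbo.authors a
   FOR XML RAW('authors'), TYPE);

-- Shred: first one fragment per author, then dig down to the titles.
SELECT A.item.value('@au_id',    'varchar(11)') AS au_id,
       A.item.value('@au_lname', 'varchar(40)') AS au_lname,
       A.item.value('@au_fname', 'varchar(20)') AS au_fname,
       T.item.value('@title',    'varchar(80)') AS title
FROM   @x.nodes('/authors') AS A(item)
CROSS  APPLY A.item.nodes('titles') AS T(item);
```

With CROSS APPLY, authors without any titles drop out of the result; OUTER APPLY would keep them with a NULL title.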

The first call to nodes gives you a fragment per authors node, and then you use CROSS APPLY to dig down to the titles node. For a little longer discussion on this way of shredding a hierarchical XML document, see the XML section of my article Arrays and Lists in SQL Server (The Long Version).

So much for the technique of using this method. Let's now assess it. If you have never worked with XML in SQL Server, you are probably saying to yourself "I will never use that!" And one can hardly blame you.

This method is like pushing the table camel through the needle's eye of the parameter list of a stored procedure. Personally, I think the method spells k-l-u-d-g-e. But it's certainly a matter of opinion.

I got a mail from David Walker, and he went as far as saying this is the only method that really works. And, that cannot be denied, there are certainly advantages with XML over about all the other methods I have presented here. It is less contrived than using the CLR, and it is definitely a better option than OPENQUERY. You are not caught up with the limitations of table-valued functions. Nor do you have any of the issues with INSERT-EXEC. Compared to temp tables and process-keyed tables, you don't have to be worried about recompilation or that programmers fail to clean up a process-keyed table after use.

When it comes to performance, you get some cost for building the XML document and shredding it shortly thereafter. Then again, as long as the amount of data is small, say less than KB, the data will stay in memory and there is no logging involved like when you use a table of any sort.

Larger XML documents will spill to disk, though. A general caveat is that inappropriate addressing in a large XML document can be a real performance killer, so if you expect large amounts of data, you have to be careful. And these issues can appear with sizes below KB.

Besides the daunting complexity, there are downsides with XML from a robustness perspective. XML is more sensitive to errors. If you make a spelling mistake in the first argument to value, you will silently get NULL back, and no error message.

Likewise, if you get the argument to nodes wrong, you will simply get no rows back. The same problem arises if you change a column alias or a node name in the FOR XML query, and forget to update a caller. When you use a process-keyed table or a temp table you will get an error message at some point, either at compile-time or at run-time. Another weak point is that you have to specify the data type for each column in the call to value, inviting you to make the mistake of using different data types for the same value in different procedures.

This mistake is certainly possible when you use temp tables as well, although copy-and-paste is easier to apply to the latter. With a process-keyed table it cannot happen at all. One thing I like with tables is that they give you a description of the data you are passing around; this is not the least important when many procedures are using the same process-keyed table. This is more difficult to achieve with XML. You could use schema collections for the task, but you will not find very many SQL Server DBAs who speak XSD fluently.

Also, schema-bound XML tends to incur a performance penalty in SQL Server. For these reasons, I feel that using a temp table or a process-keyed table are better choices than XML. And while I find XML an overall better method than INSERT-EXEC or OPENQUERY, these methods have the advantage that you don't have to change the callee. So that kind of leaves XML in nowhere land. But as they say, your mileage may vary. If you feel that XML is your thing, go for it!

This method was suggested to me by Peter Radocchia. It relies on cursor variables, which I never use myself, but you can use them to bring the result set from one procedure to another. Note that the cursor is STATIC. Static cursors are much preferable over dynamic cursors, the default cursor type, since the latter essentially evaluate the query for every FETCH. When you use a static cursor, the result set of the SELECT statement is saved into a temp table, from where FETCH retrieves the data.
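A sketch of the cursor method follows, using the authors table in pubs. The procedure names get_cursor and caller are made up for the example.

```sql
CREATE PROCEDURE dbo.get_cursor @cursor CURSOR VARYING OUTPUT AS
   -- STATIC: the result set is materialised once when the cursor is opened.
   SET @cursor = CURSOR STATIC FOR
      SELECT au_id, au_lname, au_fname FROM dbo.authors;
   OPEN @cursor;
go
CREATE PROCEDURE dbo.caller AS
   SET NOCOUNT ON;
   DECLARE @cursor   CURSOR,
           @au_id    varchar(11),
           @au_lname varchar(40),
           @au_fname varchar(20);

   EXEC dbo.get_cursor @cursor OUTPUT;

   WHILE 1 = 1
   BEGIN
      -- The variable list must match the callee's column list exactly.
      FETCH NEXT FROM @cursor INTO @au_id, @au_lname, @au_fname;
      IF @@fetch_status <> 0 BREAK;
      PRINT 'Au_id: ' + @au_id + ', name: ' + @au_fname + ' ' + @au_lname;
   END

   DEALLOCATE @cursor;
```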

I will have to admit that I see little reason to use this method. Just like INSERT-EXEC, this method requires an exact match between the caller and the callee for the column list. And since data is processed row by row, performance is likely to take a serious toll if there are any volumes.

When you set and read values in the session context, you can use variables instead of literals in all places. In total, you can store up to KB of data including keys this way.
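As a small sketch of setting and reading a session context value with variables rather than literals (the key name and the value are only illustrative):

```sql
DECLARE @key   sysname       = N'AppUser',
        @value nvarchar(128) = N'alice';

-- Store the value for the rest of the session.
EXEC sp_set_session_context @key = @key, @value = @value;

-- SESSION_CONTEXT returns sql_variant, so convert to the type you expect.
SELECT convert(nvarchar(128), SESSION_CONTEXT(@key)) AS AppUser;
```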

It's a different matter if there are one or more procedures in between. In that case a top SP can set a value which an SP three levels down the stack can retrieve without the value being passed as a parameter all the way down.

Session context is intended to be used for things that are common to the session. You may have checks in a trigger to prevent violation of business rules. However, you may have a stored procedure that needs to make a temporary breach of a certain business rule within a transaction.
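The trigger scenario could be sketched like this. Everything here is hypothetical: the table, the business rule and the key name SkipOrderCheck are invented to show the pattern only.

```sql
CREATE TABLE dbo.orders (orderid int NOT NULL PRIMARY KEY,
                         qty     int NOT NULL);
go
CREATE TRIGGER dbo.orders_check ON dbo.orders AFTER INSERT, UPDATE AS
   -- A procedure has announced a temporary breach: skip the check.
   IF isnull(convert(int, SESSION_CONTEXT(N'SkipOrderCheck')), 0) = 1
      RETURN;

   IF EXISTS (SELECT * FROM inserted WHERE qty <= 0)
   BEGIN
      ROLLBACK TRANSACTION;
      RAISERROR('qty must be positive.', 16, 1);
   END
go
-- In the procedure that needs the temporary breach:
BEGIN TRANSACTION;
EXEC sp_set_session_context @key = N'SkipOrderCheck', @value = 1;
-- ... statements that break the rule and then restore it ...
EXEC sp_set_session_context @key = N'SkipOrderCheck', @value = NULL;
COMMIT TRANSACTION;
```

In real code you would also want to make sure that the flag is cleared if an error occurs halfway through.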

If you are so inclined, you can also use session context to have output parameters from your trigger. While it could be used for "anything", I would recommend to not use it for anything else than the name of the actual user when an application logs in on behalf of the user, as this is a very common scenario.

If your procedures are on different servers, the level of difficulty rises steeply.

There are many restrictions with linked servers, and several of the methods I have presented cannot be used at all. Ironically, some of the methods that I have discouraged you from suddenly step up as the better alternatives.

One reason for this is that with linked servers, things are difficult anyway. It is somewhat easier to retrieve data from a procedure on a linked server than passing data to it, so let's look at output first. If you have an input-output scenario, you should probably look into mixing methods.

OUTPUT parameters: but only for data types that are bytes or less. That is, you cannot retrieve the value of output parameters that are varchar(MAX) etc.

INSERT-EXEC: INSERT-EXEC works fine with linked servers. Actually, even better than with local procedures, since if the procedure you call uses INSERT-EXEC, this will not matter. The only restriction is that the result set must not include types that are not supported in distributed queries, for instance xml.

The fact that INSERT-EXEC starts a transaction can cause a real nightmare, since the transaction now will be a distributed transaction and this requires that you configure MSDTC (Microsoft Distributed Transaction Coordinator) correctly. If both servers are in the same domain, it often works out of the box. If they are not, for instance because you only have a workgroup, it may be impossible (at least I have not been able to).

Note that this affects all use of the linked server, and there may be situations where a distributed transaction is desirable.

OPENQUERY: since OPENQUERY is a feature for linked servers in the first place, there is no difference to what I discussed above. It is still difficult with lots of pitfalls, but the land of linked servers is overall difficult. Nevertheless, INSERT-EXEC will in many cases be simpler to use. But with OPENQUERY you don't have to bounce the remote data over a table, and if the result set of the remote procedure is extended with more columns, your code will not break.

Using the CLR — Using the CLR for linked servers is interesting, because the normal step would be to connect to the remote server directly, and bypass the local definition of linked servers — and thereby bypass all restrictions with regards to data types.

When you make a connection to a remote server through the CLR, the default is to enlist into the current transaction, which means that you have to battle MSDTC. When using the CLR to access a remote server, there are no obstacles with using ExecuteReader and storing the data into a local table as they come, since you are using two different connections.

XML: You cannot use the xml data type in a call to a remote stored procedure. However, you can make the OUTPUT parameter to be varchar and return the XML document that way, if it fits.

The other methods do not work at all, and that includes user-defined functions.

You cannot call a user-defined function on a linked server.

If you want to pass a large amount of data for input over a linked server, there are three possibilities. Or three kludges if you like. XML might be the easiest. The xml data type is not supported in calls to remote procedures, so you need to convert the XML document to nvarchar(MAX) or varbinary(MAX).
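A sketch of the XML alternative for input could look as follows. The linked server REMOTESRV, the database somedb and the procedure receive_data are hypothetical names, and the remote procedure is assumed to declare its parameter as xml, so that the string is converted back on arrival. The linked server must also be configured to permit remote procedure calls.

```sql
-- Build the document locally; a VALUES list stands in for real data here.
DECLARE @xmldata xml = (
   SELECT v.id, v.name
   FROM   (VALUES (1, N'Alpha'), (2, N'Beta')) AS v(id, name)
   FOR XML RAW('item'), TYPE);

-- xml cannot be passed to a remote procedure, so ship it as a string.
DECLARE @xmlstr nvarchar(MAX) = convert(nvarchar(MAX), @xmldata);

EXEC REMOTESRV.somedb.dbo.receive_data @xmlstr;
```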

The parameter on the other side can still be xml. Note that the restriction mentioned in the previous section only applies to OUTPUT parameters. For input, there is no restriction.

You cannot pass a table-valued parameter to a remote stored procedure.

The last alternative is really messy. The caller stores the data in a process-keyed table locally and then calls the remote procedure, passing the process-key.

The remote procedure then calls back to the first server and either selects directly from the process-keyed table, or calls a procedure on the source server with INSERT-EXEC. For an input-output scenario, the callee could write data back directly to the process-keyed table.

The issue about using SET FMTONLY ON is something that I learnt from Umachandar Jayachandran at Microsoft.

SQL Server MVP Tony Rogerson pointed out that a process-keyed table should have a clustered index on the process key. Simon Hayes suggested some clarifications. Peter Radocchia suggested the cursor method.

Richard St-Aubin and Wayne Bloss both suggested interesting approaches when sharing temp tables. Thanks to SQL Server MVP Itzik Ben-Gan for making me aware of global temp tables and start-up procedures. Sankar Reddy pointed out to me that my original suggestion for XML as a solution for linked servers was flawed.

SQL Server MVP Adam Machanic made some interesting revelations about INSERT-EXEC with dynamic SQL. David Walker encouraged me to write more in depth on XML, and SQL Server MVP Denis Gobo gave me a tip on that part.

Jay Michael pointed out an error in the section on table parameters. Alex Friedman made me aware of the cache-littering problem when sharing temp tables and encouraged me to write about session context. If you have suggestions for improvements, corrections on topic, language or formatting, please mail me at esquel sommarskog.

MsgLevel 16, State 1, Procedure BigSalesByStore, Line 8 An INSERT EXEC statement cannot be nested.

MsgLevel 16, State 7, Procedure SalesByStore, Line 2 Column name or number of supplied values does not match table definition.

MsgLevel 16, State 0, Procedure SalesByStore, Line 9 Cannot use the ROLLBACK statement within an INSERT-EXEC statement.

MsgLevel 16, State 1, Line 11 Cannot process the object "EXEC tempdb.

Complex, but useful as a last resort when INSERT-EXEC does not work.